Scaling a system to support millions of users is a challenge that many businesses face as they grow. Creating a scalable, reliable infrastructure is crucial to handle high traffic and deliver a seamless user experience. In this article, we’ll walk through practical steps and architectural strategies to scale your system effectively, from database optimization and caching to distributed systems and load balancing. Let’s dive in!

The Ultimate Guide to Scaling Your Application for Millions of Users

1. Understand the Basics of Scalability

Scalability is a system’s ability to handle increased load without degrading performance. There are two main approaches:

- Vertical Scaling (Scaling Up): Adding CPU, RAM, or storage to a single server so it can handle larger workloads. It is simple to apply but limited by the largest machine available, and the server remains a single point of failure.

- Horizontal Scaling (Scaling Out): Adding more servers and distributing the load across them. This approach is better suited to handling millions of users because capacity grows with the number of nodes.

Horizontal scaling is often preferred for large-scale applications because it offers better redundancy and fault tolerance. The two approaches are not mutually exclusive, though, and are often combined.

2. Optimize Database Performance

Databases often become bottlenecks as the number of users increases. Here are some database optimization strategies:

- Database Indexing: Indexes speed up data retrieval by allowing quick lookups of frequently accessed data. However, over-indexing can slow down write operations, so it’s essential to find a balance.

- Database Partitioning (Sharding): This involves splitting large databases into smaller, more manageable pieces (shards), each stored on a different server. Sharding allows horizontal scaling and improves query performance for large datasets.

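- Replication: Database replication keeps copies of the data on multiple servers, which improves read performance and provides redundancy. With asynchronous replication, replicas can lag behind the primary, so reads served from a replica may be slightly stale.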

- Use a Distributed Database: If your application handles a massive amount of data, consider using distributed databases like Cassandra, MongoDB, or Google Bigtable, which are designed to handle large-scale data across multiple nodes.
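To make the sharding idea above concrete, here is a minimal sketch of hash-based shard routing. The shard names and the `shard_for` helper are hypothetical, and the choice of the user ID as the shard key is an assumption made for illustration; real systems usually get this from the database or a routing layer.

```python
import hashlib

# Hypothetical shard names; in practice each would map to a database connection.
SHARDS = ["users-shard-0", "users-shard-1", "users-shard-2", "users-shard-3"]


def shard_for(user_id: str, shards: list[str] = SHARDS) -> str:
    """Pick a shard by hashing the shard key (here, the user ID)."""
    # Use a stable hash: Python's built-in hash() for strings is randomized
    # per process, so it would route the same user differently after a restart.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    for uid in ("alice", "bob", "carol"):
        print(uid, "->", shard_for(uid))
```

One design caveat: with simple modulo routing, changing the number of shards remaps most keys. Schemes such as consistent hashing are commonly used to reduce that data movement.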

3. Implement Caching Solutions

Caching stores frequently accessed data in a fast, temporary storage layer, allowing quicker data retrieval and reducing database load. Popular caching strategies include:

- In-Memory Caching: Tools like Redis and Memcached store data in memory, allowing for high-speed data retrieval.

- Content Delivery Networks (CDNs): CDNs cache static content, such as images and stylesheets, at edge locations closer to users, reducing load times and server load.

- Application-Level Caching: Implement caching at the application level for frequently requested data that doesn’t change often, such as user profiles or settings.

Caching is particularly useful in scenarios with high read-to-write ratios, as it allows data to be fetched quickly without querying the database repeatedly.
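The pattern described above is often called cache-aside: check the cache first, and only query the database on a miss. Below is a minimal in-process sketch with a time-to-live. The `TTLCache` class and its method names are illustrative assumptions; a production setup would more likely use Redis or Memcached, but the control flow is similar.

```python
import time
from typing import Any, Callable


class TTLCache:
    """A tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: dict[str, tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return the cached value, or call loader() on a miss and cache it."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database query
        value = loader()  # cache miss or expired: go to the source of truth
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop a key, for example after the underlying record is updated."""
        self._entries.pop(key, None)


if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=60)
    # The lambda stands in for a real database lookup.
    profile = cache.get_or_load("user:42", lambda: {"id": 42, "name": "Ada"})
    print(profile)
```

Calling `invalidate` when a record changes keeps readers from seeing stale data for the full TTL, at the cost of one extra database read on the next request.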

4. Implement Load Balancing

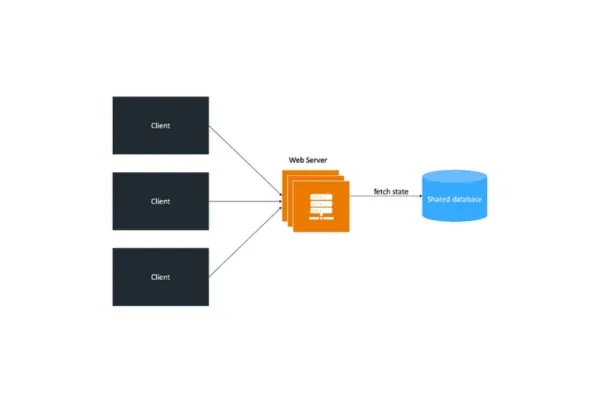

Load balancers help manage incoming traffic by spreading it across multiple servers, preventing any single server from becoming overloaded. They improve fault tolerance and allow for scaling by adding or removing servers as needed. Common load balancing algorithms include:

- Round Robin: Requests are distributed to each server in a sequential order.

- Least Connections: In this method, incoming requests are directed to the server with the fewest active connections, ensuring a more balanced load.

- IP Hashing: Requests from the same client IP are directed to the same server, which is useful for session affinity (sticky sessions) when session state is stored on the server.

Popular load balancers include NGINX, HAProxy, and cloud-based options like AWS Elastic Load Balancing (ELB) and Google Cloud Load Balancing.
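The three algorithms listed above can be sketched in a few lines each. This is a simplified illustration, not how NGINX or HAProxy implement them; the class and function names are made up for the example, and server names are plain strings.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed, repeating order."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(list(servers))

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties go to the server listed first (dicts keep insertion order).
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when the request finishes.
        self.active[server] -= 1


def ip_hash(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to the same server every time (for a fixed server list)."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


if __name__ == "__main__":
    servers = ["app-1", "app-2", "app-3"]
    rr = RoundRobin(servers)
    print([rr.pick() for _ in range(4)])
    print(ip_hash("203.0.113.7", servers))
```

Note that `ip_hash` only stays stable while the server list is unchanged; adding or removing a server moves some clients to a different backend.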

5. Adopt a Microservices Architecture

Microservices architecture breaks down an application into smaller, standalone services that communicate through APIs. Each service handles a specific function (e.g., user authentication or payments) and can be developed, deployed, and scaled independently. Benefits of microservices include:

- Independent Scaling: Each service can be scaled based on its own resource needs.

- Fault Isolation: A failure in one microservice need not bring down the entire application, provided callers handle the failure gracefully (for example, with timeouts and fallbacks).

- Technology Flexibility: Teams can use different technologies for different services, depending on requirements.

Popular frameworks for building microservices include Spring Boot (Java), Express (Node.js), and Flask (Python).

6. Use Asynchronous Processing for Resource-Intensive Tasks

For tasks that require substantial resources or time, like data processing or image resizing, consider moving them to background jobs. This approach keeps user-facing operations responsive. Asynchronous processing can be implemented using:

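- Message Queues: Systems like RabbitMQ or Apache Kafka buffer work durably between producers and consumers so that background tasks are not lost when a worker is busy or restarts. Ordering guarantees are limited: Kafka preserves order only within a partition, and RabbitMQ delivery order can change when multiple consumers or redeliveries are involved, so design tasks to tolerate reordering where possible.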

- Task Queues: Frameworks like Celery (for Python) or Sidekiq (for Ruby) manage tasks and allow for retrying failed tasks.

Asynchronous processing is particularly useful in applications that handle large volumes of user-generated content or complex computations.
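The sketch below shows the shape of a background worker with retries, using only Python's standard library. It is an in-process stand-in, not a replacement for RabbitMQ, Kafka, Celery, or Sidekiq: the `worker` and `run_jobs` helpers and the `resize_image` task are hypothetical names chosen for the example.

```python
import queue
import threading
from typing import Any, Callable


def worker(tasks: queue.Queue, results: list, max_attempts: int) -> None:
    """Process (func, arg, attempt) items until a None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: shut the worker down
            tasks.task_done()
            break
        func, arg, attempt = item
        try:
            results.append(func(arg))
        except Exception:
            if attempt < max_attempts:
                tasks.put((func, arg, attempt + 1))  # re-enqueue for a retry
            else:
                results.append(("failed", arg))  # give up after max_attempts
        finally:
            tasks.task_done()


def run_jobs(func: Callable[[Any], Any], args: list,
             max_attempts: int = 3) -> list:
    """Run func over args on one background thread and collect the results."""
    tasks: queue.Queue = queue.Queue()
    results: list = []
    thread = threading.Thread(target=worker, args=(tasks, results, max_attempts))
    thread.start()
    for arg in args:
        tasks.put((func, arg, 1))
    tasks.join()      # wait until every task, including retries, is done
    tasks.put(None)   # then ask the worker to stop
    thread.join()
    return results


def resize_image(name: str) -> str:
    """Stand-in for a slow job; a real task would do actual image work."""
    return f"{name}:resized"


if __name__ == "__main__":
    print(run_jobs(resize_image, ["a.png", "b.png"]))
```

In a web application the request handler would only enqueue the task and return immediately; the blocking `tasks.join()` here exists so the demo can print its results.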

7. Design for Failure and Implement Redundancy

Ensuring high availability is essential for systems with millions of users. Key principles include:

- Redundant Architecture: Duplicate critical components (e.g., servers, databases) to avoid single points of failure.

- Health Checks: Regularly monitor the health of servers and services, automatically rerouting traffic from failed components.

- Automated Failover: In the event of a server or database failure, traffic should automatically switch to backup resources without affecting user experience.

Cloud platforms like AWS, Azure, and Google Cloud offer built-in solutions for redundancy, such as multiple availability zones and failover configurations.
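As a rough illustration of how health checks and automated failover fit together, the sketch below marks an endpoint unhealthy after several consecutive failed checks and routes to the next one in priority order. The `HealthTracker` class, the endpoint names, and the threshold of three failures are assumptions for the example; managed load balancers and database proxies provide this behavior for you.

```python
from typing import Optional


class HealthTracker:
    """Track consecutive health-check failures and pick the active endpoint."""

    def __init__(self, endpoints: list[str], failure_threshold: int = 3) -> None:
        self.endpoints = list(endpoints)  # ordered by priority, primary first
        self.failure_threshold = failure_threshold
        self.failures = {endpoint: 0 for endpoint in self.endpoints}

    def record(self, endpoint: str, ok: bool) -> None:
        """Store the result of one health check."""
        # A single success resets the counter; failures accumulate.
        self.failures[endpoint] = 0 if ok else self.failures[endpoint] + 1

    def is_healthy(self, endpoint: str) -> bool:
        return self.failures[endpoint] < self.failure_threshold

    def active(self) -> Optional[str]:
        """Return the highest-priority healthy endpoint, or None if all are down."""
        for endpoint in self.endpoints:
            if self.is_healthy(endpoint):
                return endpoint
        return None


if __name__ == "__main__":
    tracker = HealthTracker(["db-primary", "db-replica"])
    for _ in range(3):
        tracker.record("db-primary", ok=False)  # simulated failed checks
    print(tracker.active())
```

Requiring several consecutive failures before failing over avoids flapping on a single slow response, at the cost of a slightly longer detection time.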

8. Monitor and Analyze System Performance

Monitoring tools provide visibility into system health and performance, allowing you to identify and resolve issues before they impact users. Essential monitoring metrics include CPU usage, memory, disk I/O, network latency, and error rates. Key tools for monitoring include:

- Prometheus: A widely used open-source monitoring system that collects time-series metrics and offers a flexible query language (PromQL) for detailed performance analysis.

- Grafana: A visualization tool often used with Prometheus for creating dashboards.

- ELK Stack (Elasticsearch, Logstash, and Kibana): Useful for log analysis and real-time analytics.

Setting up alerts based on performance thresholds ensures that your team can respond to issues promptly, minimizing downtime and improving user experience.
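A threshold alert of the kind described above can be sketched as a sliding-window error-rate check. The `ErrorRateAlert` class and its default values are illustrative assumptions; in practice you would express the same rule in your monitoring tool's alerting configuration rather than in application code.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` requests exceeds a threshold."""

    def __init__(self, window: int = 100, threshold: float = 0.05,
                 min_samples: int = 20) -> None:
        self.outcomes: deque = deque(maxlen=window)  # old outcomes fall off
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, is_error: bool) -> bool:
        """Record one request outcome; return True if the alert should fire."""
        self.outcomes.append(is_error)
        if len(self.outcomes) < self.min_samples:
            return False  # too little data to judge reliably
        error_rate = sum(self.outcomes) / len(self.outcomes)
        return error_rate > self.threshold


if __name__ == "__main__":
    alert = ErrorRateAlert(window=10, threshold=0.2, min_samples=5)
    # Simulated traffic: four successes followed by three errors.
    for outcome in [False] * 4 + [True] * 3:
        print(outcome, alert.observe(outcome))
```

The `min_samples` guard keeps the alert from firing on the first failed request after a deploy or during very low traffic.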

9. Plan for Future Growth

Scalability is an ongoing process. Regularly review your system architecture and assess if it can handle projected growth. Consider setting up automated scaling in cloud environments, which can add or remove resources based on real-time demand. This elasticity is especially helpful for applications with varying traffic patterns.
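To show what "add or remove resources based on real-time demand" can look like, here is a minimal sketch of a proportional scaling rule: scale the instance count by the ratio of observed to target utilization, then clamp it to a configured range. The function name, parameters, and limits are assumptions for illustration; the rule is similar in spirit to what target-tracking autoscalers do, but each cloud provider has its own policy details.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Return how many instances to run for the observed utilization.

    current_util and target_util must use the same unit (for example,
    average CPU percent across instances).
    """
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util must be > 0")
    # Proportional rule: 4 instances at 90% with a 60% target -> 6 instances.
    raw = math.ceil(current * current_util / target_util)
    # Clamp so a metric spike or a quiet period cannot scale without bound.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(current=4, current_util=90, target_util=60))
```

Real autoscalers usually add a cooldown or stabilization period on top of a rule like this so that brief spikes do not cause repeated scale-up and scale-down cycles.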

Continuous integration and delivery (CI/CD) pipelines also play a crucial role, allowing you to deploy updates efficiently and roll back quickly if issues arise.

Conclusion

Scaling a system to support millions of users requires a comprehensive approach that covers database optimization, caching, load balancing, microservices, and failure management. Implementing these strategies not only prepares your system for growth but also enhances reliability and performance, leading to a better experience for your users. With continuous monitoring, proactive planning, and a flexible architecture, you can ensure that your system remains responsive and resilient as user demand increases.